Natural Language Processing: BERT Model | Text Classification

Transformers are taking the natural language processing (NLP) world by storm. In this document, I'll teach you about this go-to architecture for NLP and computer vision tasks, a must-have skill in your artificial intelligence toolkit. I'll take a hands-on approach to show you the basics of working with transformers in NLP and in production. I'll go over BERT model sizes, bias in BERT, and how BERT was trained. I'll also explore transfer learning, show you how to use the BERT model and its tokenization, and cover text classification.

1. NLP and Transformers

Transformers in NLP

Have you seen the terms BERT or GPT-3 in articles online and wondered what they mean? These are examples of large language models, and their underlying architecture is the transformer architecture.

Transformers

- proposed by a team of researchers from Google in 2017

- introduced in the paper "Attention Is All You Need"

- a turning point in NLP

Transformer in production

- BERT: Bidirectional Encoder Representations from Transformers

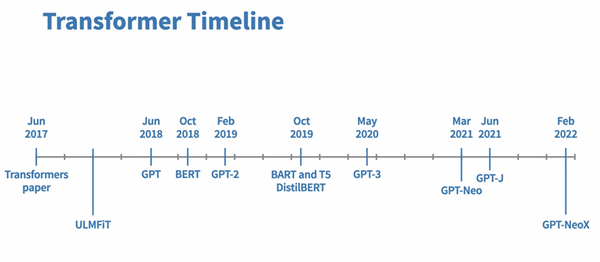

Transformer history

BERT model sizes
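
The BERT paper describes two sizes: BERT-Base (12 layers, hidden size 768, 12 attention heads, about 110M parameters) and BERT-Large (24 layers, hidden size 1024, 16 attention heads, about 340M parameters). The sketch below uses a hypothetical helper, `bert_param_count`, to show where those parameters come from. It assumes the standard configuration (the uncased English WordPiece vocabulary of 30,522 tokens, 512 positions, 2 segment types, a feed-forward size of 4 × hidden) and counts the encoder plus pooler only, without any task-specific head.

```python
# Hypothetical helper: counts the trainable parameters of a BERT encoder
# (embeddings + `layers` encoder layers + pooler), assuming the standard
# configuration: vocab 30,522, 512 positions, 2 segment types, FFN = 4 * hidden.
def bert_param_count(hidden: int, layers: int, vocab: int = 30522,
                     max_positions: int = 512, type_vocab: int = 2) -> int:
    ffn = 4 * hidden
    layer_norm = 2 * hidden  # LayerNorm gain + bias

    # Token, position and segment embeddings, followed by one LayerNorm.
    embeddings = (vocab + max_positions + type_vocab) * hidden + layer_norm

    # Self-attention: Q, K, V and output projections, each hidden x hidden + bias.
    # The number of heads does not change this: heads split the hidden dimension.
    attention = 4 * (hidden * hidden + hidden)
    # Feed-forward network: hidden -> ffn -> hidden, with biases.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    per_layer = attention + layer_norm + feed_forward + layer_norm

    # Pooler: one dense layer applied to the [CLS] vector.
    pooler = hidden * hidden + hidden

    return embeddings + layers * per_layer + pooler


base = bert_param_count(hidden=768, layers=12)    # BERT-Base: L=12, H=768, A=12
large = bert_param_count(hidden=1024, layers=24)  # BERT-Large: L=24, H=1024, A=16
print(f"BERT-Base:  {base:,}")
print(f"BERT-Large: {large:,}")
```

Under these assumptions the totals come out at roughly 109.5M and 335M, slightly below the paper's rounded figures; most of the growth from Base to Large comes from the encoder layers, not the embeddings.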

2. BERT and Transfer Learning

Bias in BERT

How was BERT trained?

- English Wikipedia: 2.5 billion words

- BookCorpus: 800 million words

What tasks was BERT trained on?

- Masked language modeling (MLM): BERT must predict words that have been masked out of the input. For example, given "BERT is conceptually [MASK] and empirically powerful," it should predict "simple."

- Next sentence prediction (NSP): given two sentences, BERT must answer the question "Does the second sentence immediately follow the first?"
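
The two pre-training objectives can be sketched as data-preparation steps. The functions below (`mask_tokens` and `make_nsp_example` are hypothetical names) follow the recipe described in the BERT paper: about 15% of token positions are chosen for prediction, and of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged; for NSP, half the sentence pairs keep the true next sentence and half use a random one. As a simplification, each position is selected independently with probability 0.15, and WordPiece subwords and special-token handling are ignored.

```python
import random

MASK, CLS, SEP = "[MASK]", "[CLS]", "[SEP]"


def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """MLM: return (masked_tokens, labels).

    labels[i] holds the original token at positions chosen for prediction
    and None everywhere else (those positions are not scored).
    """
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:      # ~85% of positions: not selected
            continue
        labels[i] = token                  # the model must recover this token
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return masked, labels


def make_nsp_example(sent_a, sent_b, corpus, rng):
    """NSP: build one [CLS] A [SEP] B [SEP] input and its label.

    Half the time B is the true next sentence (IsNext); otherwise it is a
    random sentence from `corpus` (NotNext). A real pipeline draws the
    random sentence from a different document.
    """
    if rng.random() < 0.5:
        second, label = sent_b, "IsNext"
    else:
        second, label = rng.choice(corpus), "NotNext"
    return [CLS] + sent_a + [SEP] + second + [SEP], label


rng = random.Random(0)
tokens = "bert is conceptually simple and empirically powerful".split()
print(mask_tokens(tokens, vocab=tokens, rng=rng))
print(make_nsp_example(["bert", "is", "simple"], ["it", "works"],
                       corpus=[["the", "sky", "is", "blue"]], rng=rng))
```

Keeping 10% of selected tokens unchanged and replacing 10% with random tokens means the model cannot rely on seeing `[MASK]`, a token that never appears during fine-tuning.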

BERT is conceptually simple and empirically powerful: when it was published, it obtained new state-of-the-art results on 11 natural language processing tasks.

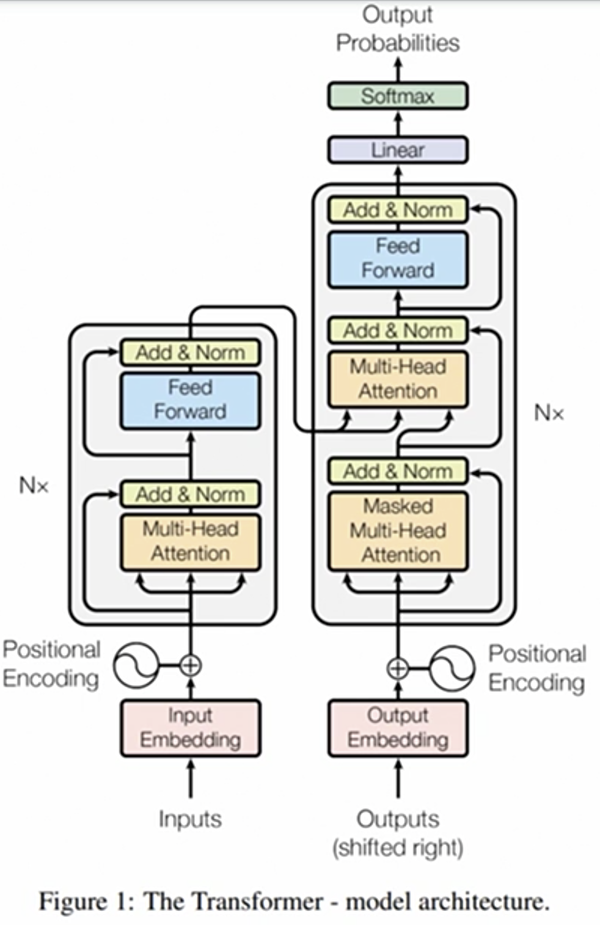

3. Transformer Architecture and BERT

3.1 Transformer: Architecture overview

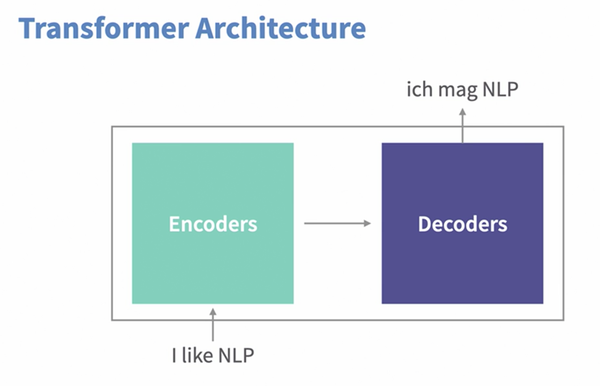

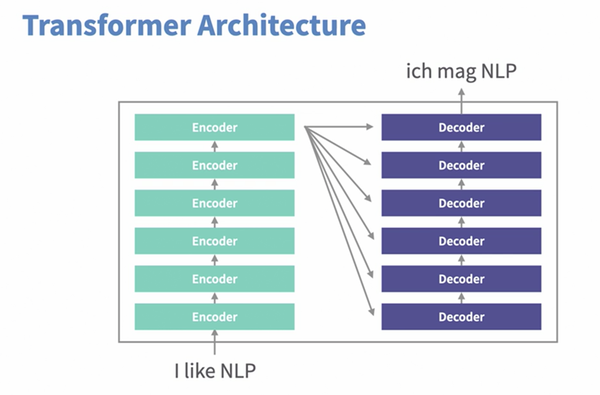
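
The core operation inside each transformer layer is scaled dot-product attention, defined in "Attention Is All You Need" as softmax(QKᵀ / √d_k)V. The sketch below implements a single attention head in plain Python (no batching, no learned projections, no multi-head splitting) so each step is visible; `scaled_dot_product_attention` is a hypothetical name, and the optional `mask` argument is an addition here to show how positions can be hidden from each other.

```python
import math


def softmax(xs):
    m = max(xs)                               # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]      # exp(-inf) == 0.0 for masked scores
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V, mask=None):
    """Single-head attention on lists of row vectors.

    Q: n x d_k, K: m x d_k, V: m x d_v.
    If given, mask[i][j] == False stops query i from attending to key j
    (each query must keep at least one visible key).
    Returns (outputs, weights): outputs is n x d_v, weights is n x m.
    """
    d_k = len(K[0])
    outputs, weights = [], []
    for i, q in enumerate(Q):
        # Similarity of query i with every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        if mask is not None:
            scores = [s if mask[i][j] else float("-inf") for j, s in enumerate(scores)]
        w = softmax(scores)                   # attention weights, sum to 1
        weights.append(w)
        # Output = weighted average of the value vectors.
        outputs.append([sum(w_j * v[c] for w_j, v in zip(w, V)) for c in range(len(V[0]))])
    return outputs, weights


# Self-attention on three toy 2-d token vectors. A real layer would first
# multiply X by learned matrices W_Q, W_K, W_V; that step is skipped here.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = scaled_dot_product_attention(X, X, X)
for row in attn:
    print([round(w, 3) for w in row])
```

Dividing by √d_k keeps the dot products from growing with the vector size, which would otherwise push the softmax toward putting almost all weight on a single key.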

1. Encoder-Decoder Model

- Generative tasks

- Examples: BART, T5

2. Encoder-Only Model

- Tasks that require understanding the input, such as sentence classification and named entity recognition

- Examples (the BERT family): BERT, RoBERTa, DistilBERT

3. Decoder-Only Model

- Generative tasks

- Examples: GPT, GPT-2, GPT-3
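
A key practical difference between the encoder-only and decoder-only families is the attention mask. An encoder such as BERT lets every token attend to every other token (hence "bidirectional"), while a decoder such as GPT uses a causal mask so each token sees only itself and earlier tokens, which is what makes left-to-right generation possible. The hypothetical `attention_mask` helper below builds both patterns for a short sequence.

```python
def attention_mask(n, causal):
    """mask[i][j] is True when position i may attend to position j."""
    return [[(j <= i) if causal else True for j in range(n)] for i in range(n)]


tokens = ["the", "cat", "sat"]
for name, causal in [("encoder-only (BERT)", False), ("decoder-only (GPT)", True)]:
    mask = attention_mask(len(tokens), causal)
    print(name)
    for i, tok in enumerate(tokens):
        visible = [tokens[j] for j in range(len(tokens)) if mask[i][j]]
        print(f"  {tok!r} can attend to {visible}")
```

With the causal mask, "the" can attend only to itself while "sat" sees all three tokens; with the bidirectional mask, every token sees the whole sentence.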

Tasks BERT Can Do

- Text classification

- Named entity recognition

- Question answering
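
For text classification, the BERT paper feeds the final hidden state of the special `[CLS]` token into a single classification layer followed by a softmax, and fine-tunes the whole model on labeled examples. The sketch below shows only that last step, with toy 3-dimensional numbers standing in for a real 768-dimensional `[CLS]` vector and trained weights; `classify` and all the values are hypothetical. Some implementations (for example Hugging Face's `BertForSequenceClassification`) first pass the `[CLS]` vector through a pooler layer.

```python
import math


def classify(cls_vector, weights, biases, labels):
    """Linear layer + softmax on the [CLS] vector.

    weights has one row per label, each row the same length as cls_vector.
    Returns (predicted_label, {label: probability}).
    """
    logits = [sum(w * x for w, x in zip(row, cls_vector)) + b
              for row, b in zip(weights, biases)]
    m = max(logits)                                  # for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = {label: e / total for label, e in zip(labels, exps)}
    return max(probs, key=probs.get), probs


# Toy values standing in for a 768-d [CLS] vector and fine-tuned weights.
cls_vector = [0.2, -1.0, 0.5]
weights = [[1.0, -0.5, 0.0],    # row for "positive"
           [-1.0, 0.5, 0.2]]    # row for "negative"
biases = [0.0, 0.0]
label, probs = classify(cls_vector, weights, biases, ["positive", "negative"])
print(label, {k: round(v, 3) for k, v in probs.items()})
```

Because only this small layer is new, fine-tuning reuses everything BERT learned during pre-training, which is the transfer-learning idea behind using BERT for classification.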

Tasks BERT Is Not Designed For

- Text generation

- Text translation

- Text summarization